(Big thanks to Jan Kiekeben for helping me put this into words/pictures today)

Here’s a common puzzle in product development.

How much solutioning should you do before starting an effort?

Solutions are good, right? Sure. But jumping to a solution too early can actually increase risk. Any product manager who has cobbled together a “quick roadmap” (or a designer a “quick mock”, or a developer a “quick estimate”) knows this. Solving for a low-value opportunity is just as troublesome. A perfectly cooked steak is worthless to a vegetarian.

Why do teams jump quickly to solutions? For various reasons. Pressure. Solutions are easier to sell and explain to your team. Solutions are, in theory, easier to estimate. Estimated solutions are, in theory, easier to prioritize. And we’ve been told—many of us since an early age—that we should bring solutions, not problems.

Solutions = good.

When thinking about whether to work on something, and how early to focus on a solution, I ask myself the following questions:

How valuable is this opportunity?

Can we experiment to learn and de-risk?

How long will this take?

Is a solution possible?

Do we know how to solve it?

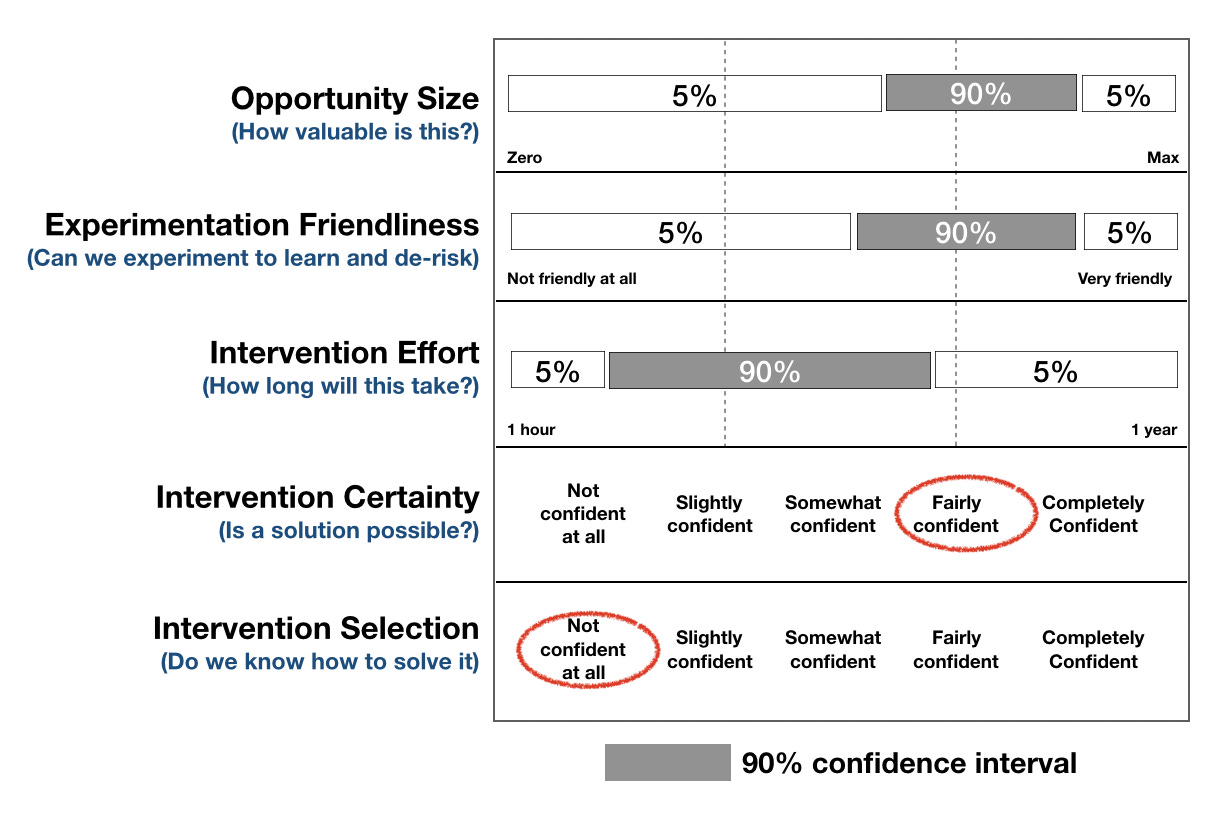

When answering these questions, I pay attention to my “confidence” levels. For example:

Let’s talk this through. In this example, I have high confidence that the opportunity is valuable. I have a similar level of confidence that the effort is friendly to experimentation. This is key because we can learn fast. My range for how long it will take is wider. Why? Although I’m confident a solution is possible, I haven’t given the solution any thought.

It might seem weird to claim that I am confident a solution is possible AND that I don’t know how to solve it. Yet consider how often we do this in life. Pre-COVID, I traveled a good deal. I almost never planned how I would get from the airport to the city center. Why? Because I was completely confident that I would find a way.

The same is true for teams with a good track-record in a particular domain. Or when prior experience suggests a situation conducive to “quick wins”. So...with this type of effort, we jump in and figure it out. We explore solutions as a team. Even if it takes longer, and some experiments fail, we are still tackling a valuable opportunity.

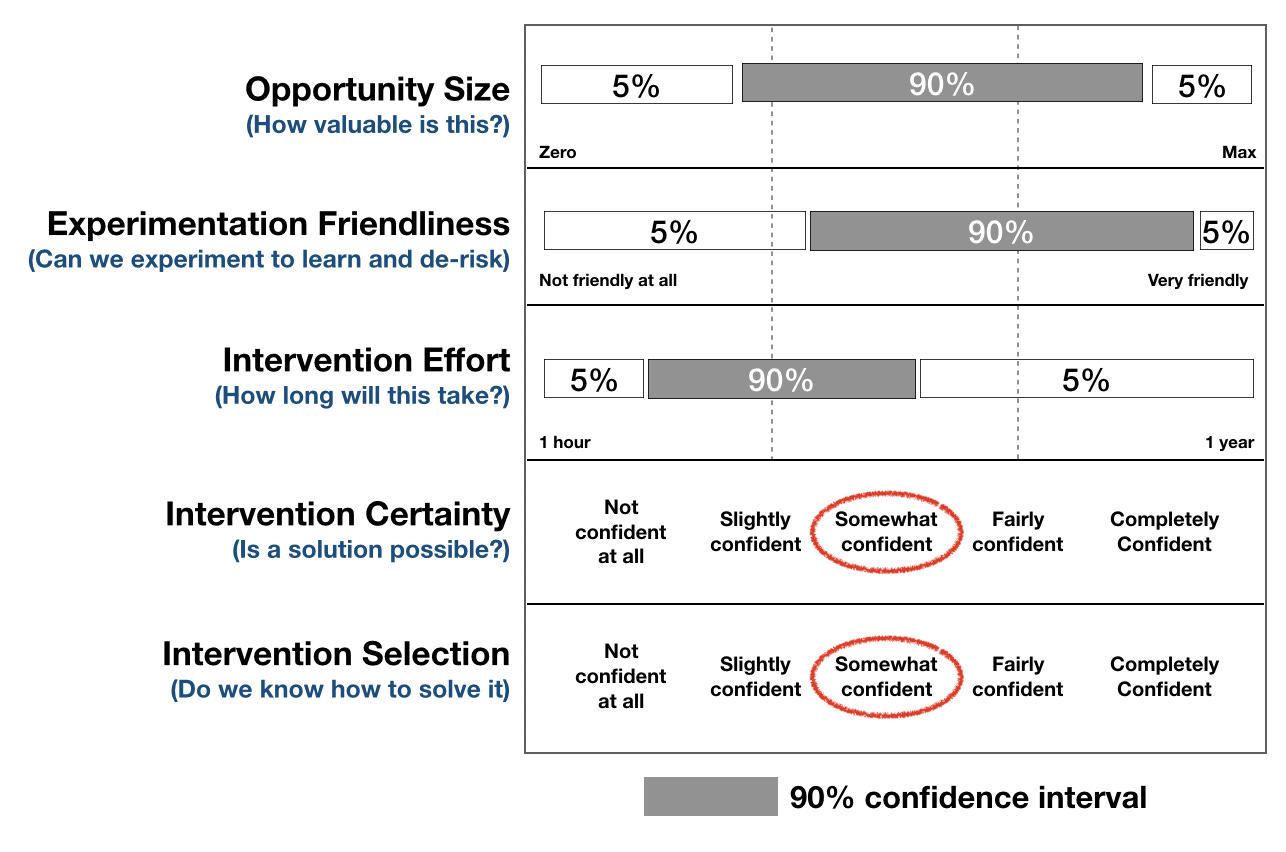

Let’s consider another example:

OK, this is interesting. The biggest area of uncertainty here seems to be the size of the opportunity. It could be very valuable. Or not all that valuable. We have some solutions in mind, and are somewhat confident that it is possible to address the opportunity. But if this turns out to be a small opportunity, we’ll be extremely sad to have invested lots of time/energy.

What do we do next? We conduct research and/or run experiments to narrow the uncertainty about the size of the opportunity. Solutioning at this point is premature (though we may ship things to learn).
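If it helps to make the two examples concrete, you can write each answer down as a low/high range and look for the widest one. The sketch below is only an illustration, not part of the diagrams: the `Range` type, the `widest` helper, the arbitrary 0 to 10 scale, and every number in it are assumptions I made up to roughly mirror the second example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    """A low/high guess on a hypothetical, arbitrary 0-10 scale."""
    low: float
    high: float

    @property
    def width(self) -> float:
        # A wider range stands for lower confidence in the answer.
        return self.high - self.low


def widest(answers: dict[str, Range]) -> str:
    """Return the question whose range is widest (first one wins on a tie)."""
    return max(answers, key=lambda question: answers[question].width)


# Made-up numbers loosely matching the second example:
# the opportunity size is the big unknown.
second_example = {
    "How valuable is this opportunity?": Range(2, 9),
    "Can we experiment to learn and de-risk?": Range(5, 8),
    "How long will this take?": Range(4, 7),
    "Is a solution possible?": Range(6, 9),
    "Do we know how to solve it?": Range(5, 8),
}

print(widest(second_example))
```

The point is not the arithmetic. It is that the widest range tells you where to spend your next bit of research or experimentation.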

The diagrams above are not a prioritization framework. You can insert many definitions of value (and experimentation, opportunity, and “solving”) when answering the questions. The important thing is that these questions help you have (more) productive conversations.

Specifically, they help your team discuss:

Is it worth trying to increase our confidence in an estimate?

Is it worth settling on a solution now in order to increase our confidence in an estimate?

What if converging on a solution actually increases risk?

Where is the uncertainty? How uncertain are we?

What should we do first to reduce that uncertainty?

How easily can we experiment and de-risk with this effort?

Are we addressing a valuable opportunity?

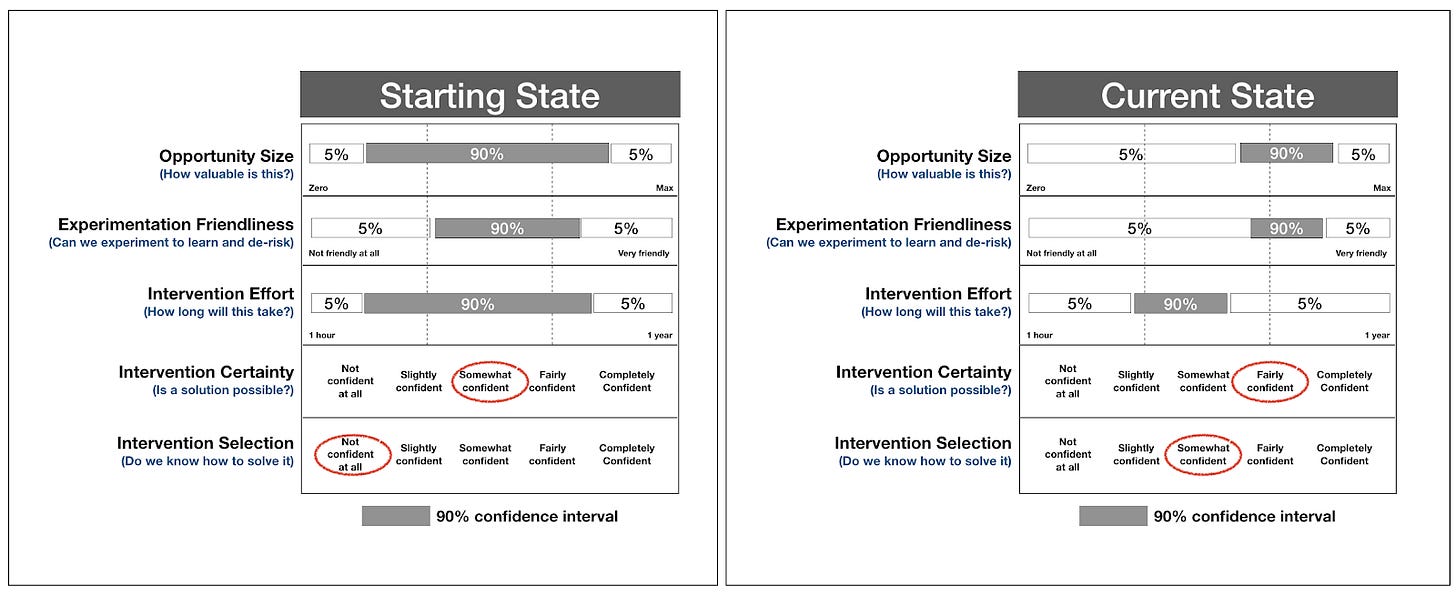

You might also use something like this to “check in” on progress.

Looks like things are moving in the right direction.
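A check-in like this boils down to comparing how wide each range was earlier with how wide it is now. Here is a minimal sketch of that comparison. The question labels, the (low, high) tuples, and the `narrowing` helper are all hypothetical, and the numbers are invented for illustration.

```python
# Each snapshot maps a question to a (low, high) guess on an arbitrary scale.
earlier = {
    "opportunity value": (2, 9),
    "time to deliver": (3, 8),
}
now = {
    "opportunity value": (5, 8),
    "time to deliver": (4, 8),
}


def narrowing(before, after):
    """Return how much each range narrowed; a negative value means it widened."""
    return {
        question: (before[question][1] - before[question][0])
        - (after[question][1] - after[question][0])
        for question in before
    }


print(narrowing(earlier, now))
```

Positive numbers mean the team has learned something about that question. A range that has not moved (or has widened) is worth a conversation.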

Hope this was useful!

I'd like to understand more about how to collect inputs to create a 90% confidence interval for the Opportunity Size. As in how to think about what is zero and what is max?