TBM 45/52: Taming Model Malpractice

Join me October 13th for a talk on Drivers, Constraints, and Floats.

Customer profiles, ICPs, segments, personas, jobs-to-be-done maps, customer health models, product health models, competitive ecosystem maps, 2x2s, 3x3s, maturity models, retention models, prioritization models, Kano Model, Porter's Five Forces Framework, Moore's technology adoption lifecycle curve. Models, models, models!

I'm back home after a team offsite, and my head is swimming in models.

It got me thinking about the models we use and how we use them.

Obligatory George Box quote:

All models are wrong, but some are useful.

George E. P. Box

Let's start by expanding on that statement.

If:

All models are wrong, but some are useful; and

Usefulness is relative to the person using the model

Then:

We have to consider the "job" of the model.

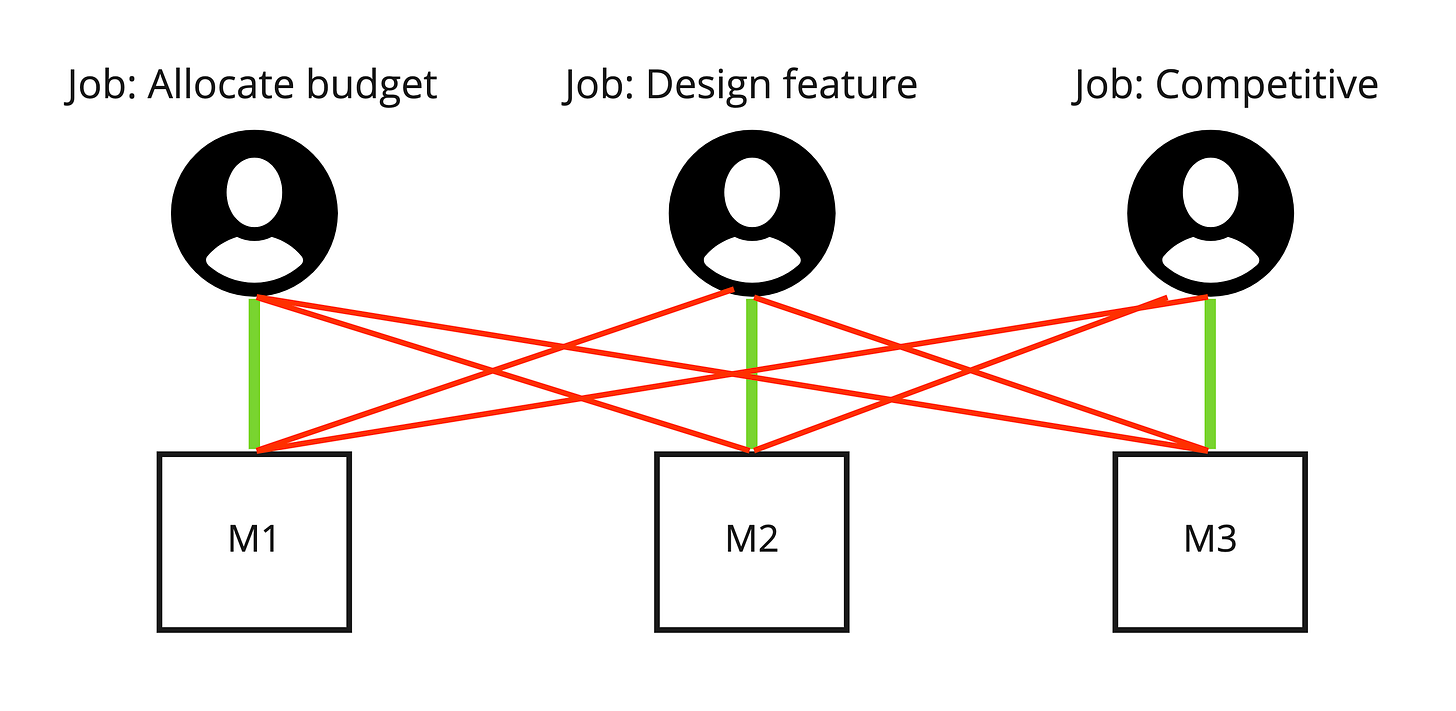

A model designed to help a marketer allocate their budget optimally will probably do a worse job of helping a designer shape a feature, for example. And the model designed to help the designer will probably do a worse job of painting a picture of the competitive landscape. The job matters.

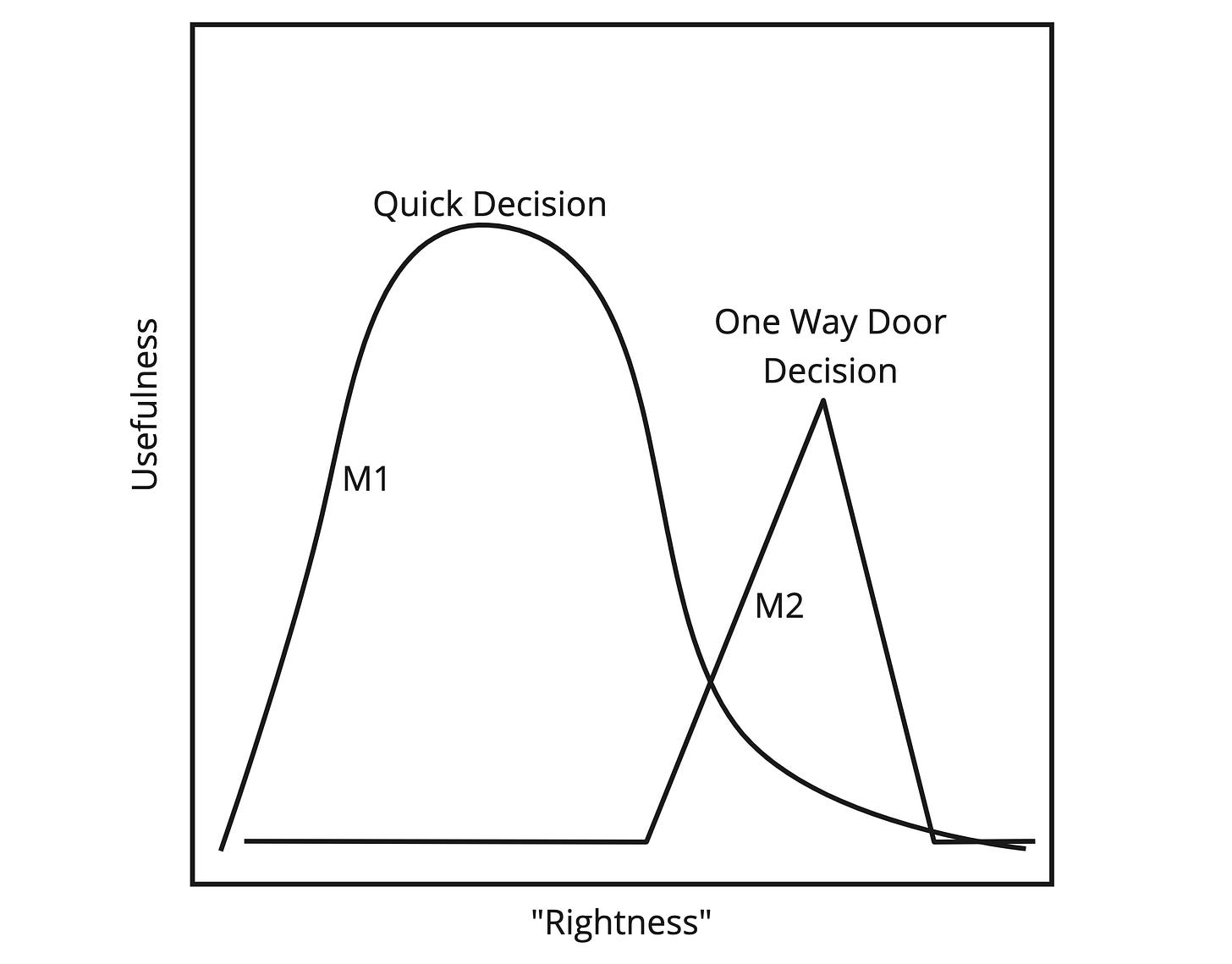

The rightness and wrongness of a model are in service to the "job" of the model. A highly simplified and "wrong" model might be perfect for making high-level (and recoverable) product investment decisions in a hurry. Making that model "righter" (more accurate, closer to the real world) will have a negative effect on that job: it will slow things down by presenting too much information and increasing cognitive load. But the same simplified model will fall flat for non-recoverable "one-way door" decisions with many dependencies.

In that sense, there are better and worse models—with varying degrees of rightness.

There are two opposing antipatterns I've noticed in companies when it comes to model-use:

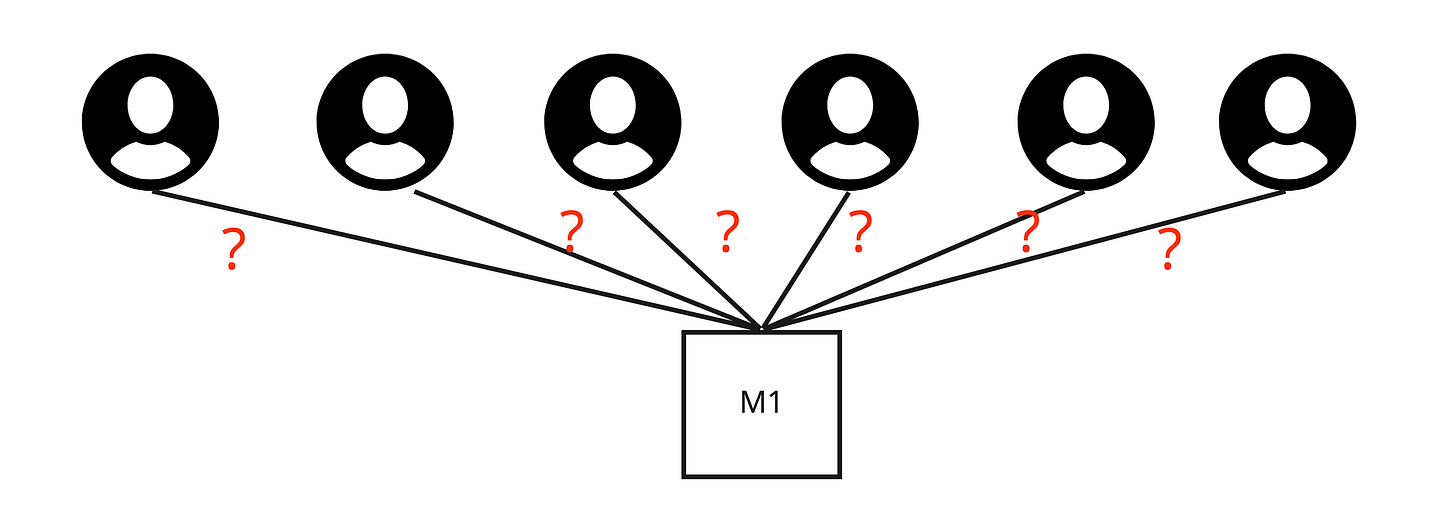

Using too many models

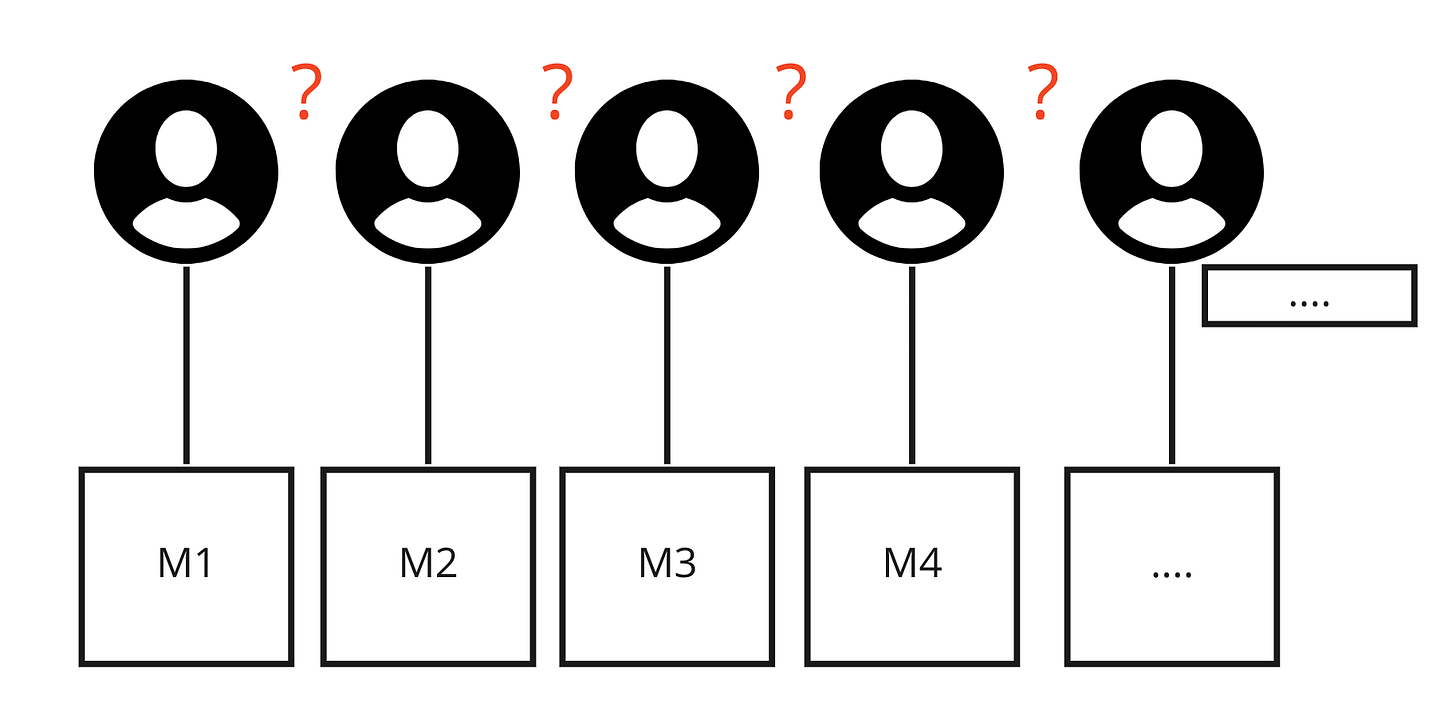

Using too few models (for too many jobs)

With the first antipattern, we hear things like "no one speaks the same language" and "I have to spend all of my time translating between different models!" I have observed companies with a product health score (Product), a customer health score (CS), AND a churn prediction model (Finance). Each did its respective job well, but it was hard to switch context between them.

With the second, we hear things like "we try to use the same words for everything," "that doesn't help me," and "well, that's the official way, but we kind of ignore it." The flipside example is a neat-and-tidy set of global customer personas that no one used or cared for.

Too many models ensure the models are doing specific jobs at the expense of broad understanding. Too few models ensure people use the same language at the expense of doing jobs well. It's a balancing act. Job-fit models vs. collaboration-fit models—both "useful" in context.

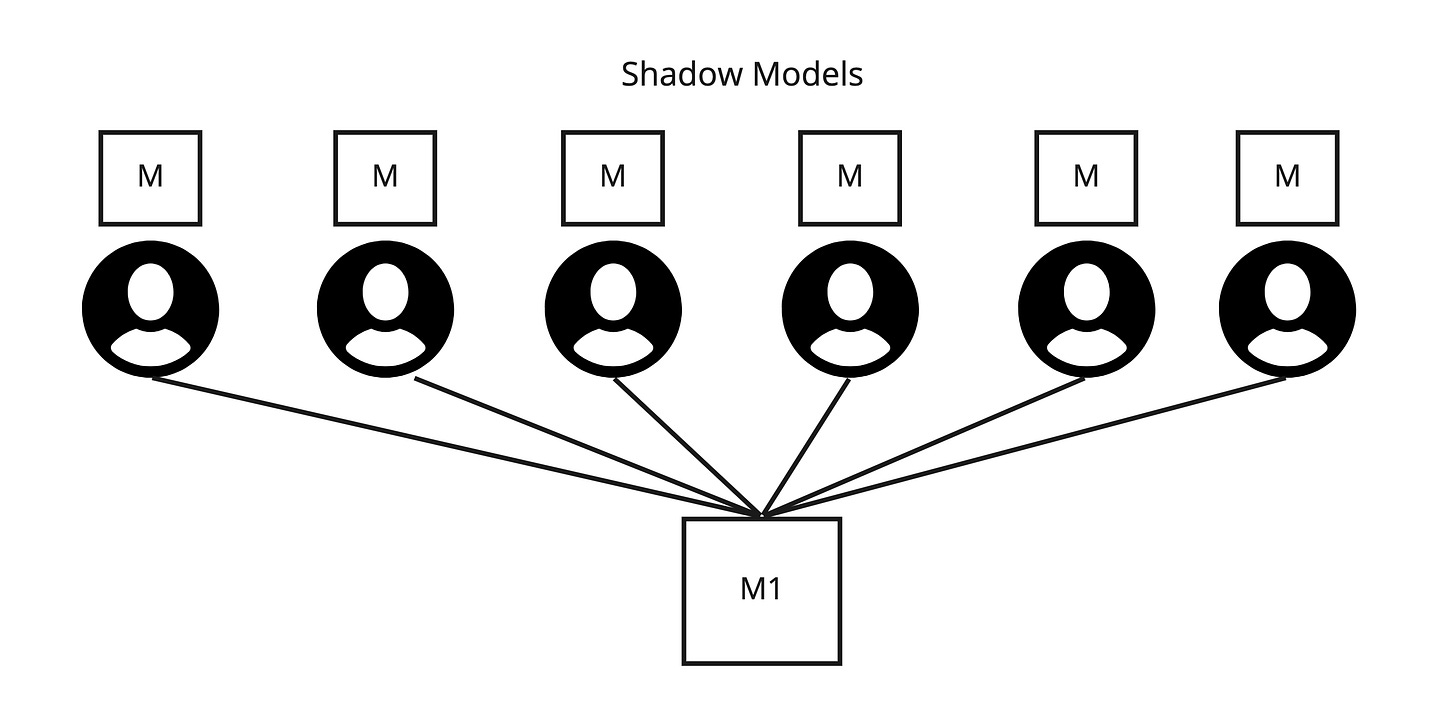

Using too few models (for too many jobs) involves an interesting twist. The local models still crop up. Why? To do the local job, you'll need local models AND a mapping to the global models. So when someone in your company says, "we need consistency between X," they are actually saying, "at the global level, we need consistency between X, but please handle the complexity and adapt locally!" Take the global customer personas mentioned above. Each team had a shadow set of personas they used locally. It was the only way to get any work done.

Put another way: "let's keep it simple" only creates the appearance of simplicity at the global level. People will constantly adapt to their local job (if they are allowed to and can get away with it).

I would add a third antipattern: not being specific about the model's job, even if the goal is general applicability.

But there are two reliable ways to counteract these antipatterns:

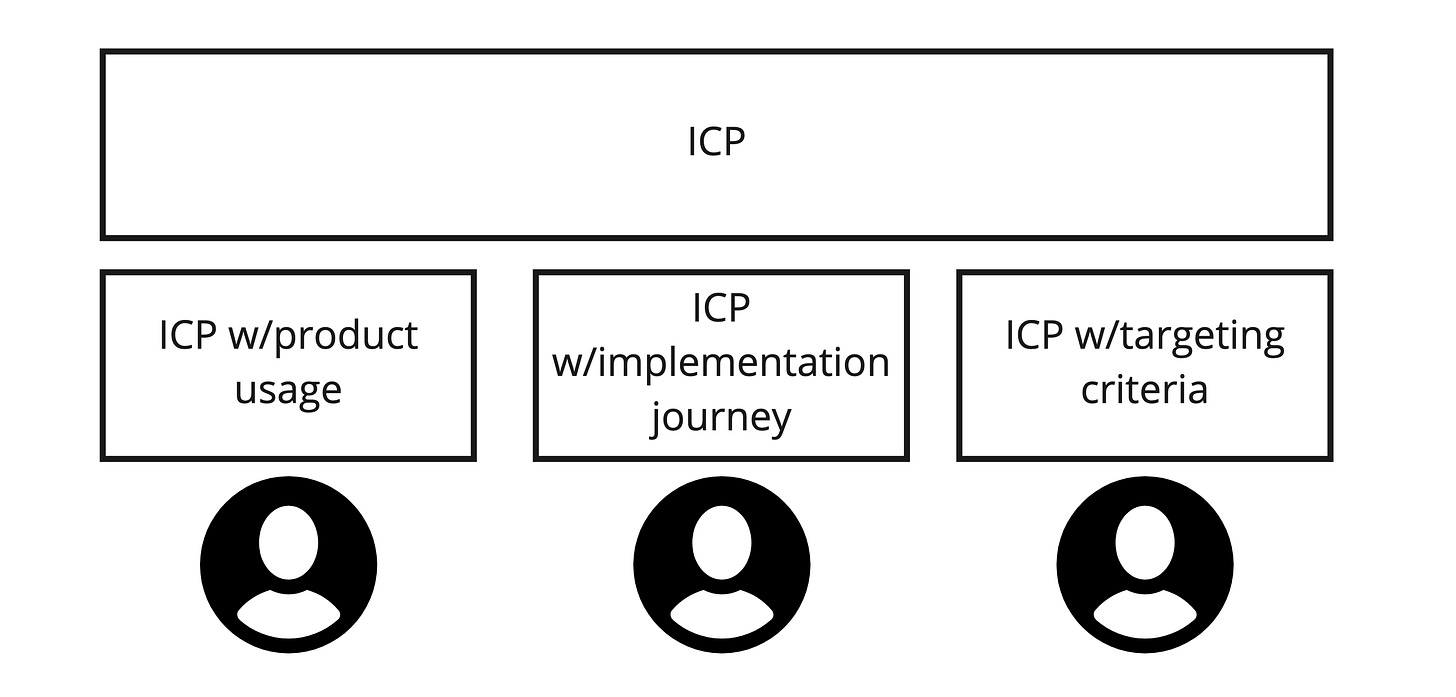

The first is a hierarchy of complementary models. For example, a company might decide on a global ICP (ideal customer profile). Each department then expands on that model to account for local concerns. Product adds more in-depth product usage characteristics. Professional services digs into the implementation journey.

The benefit here is that we acknowledge the local models but seek coherence.
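If it helps to see the layering concretely, here is a minimal Python sketch of that hierarchy. Everything in it is an assumption made for illustration: the class names, the field names, and the sample values are hypothetical, not something from a real company or tool. The only idea it tries to capture is that each local model wraps the same global ICP rather than replacing it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GlobalICP:
    """Company-wide ideal customer profile: the shared vocabulary (hypothetical fields)."""
    segment: str
    company_size: str
    core_problem: str


@dataclass(frozen=True)
class ProductICP:
    """Product's local model: the global ICP plus product usage detail."""
    base: GlobalICP
    usage_traits: tuple[str, ...] = ()


@dataclass(frozen=True)
class ServicesICP:
    """Professional services' local model: the global ICP plus implementation detail."""
    base: GlobalICP
    implementation_steps: tuple[str, ...] = ()


def is_coherent(local_models) -> bool:
    """Return True when every local model extends the same global ICP."""
    bases = {model.base for model in local_models}
    return len(bases) <= 1


# Illustrative values only.
icp = GlobalICP("mid-market SaaS", "200-1000 employees", "slow onboarding")
product = ProductICP(icp, ("weekly active admins",))
services = ServicesICP(icp, ("kickoff", "data migration"))
print(is_coherent([product, services]))
```

The local detail lives in the department's own model, while the `base` field keeps the mapping back to the global model explicit, so drift between teams is something you can check for rather than discover by accident.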

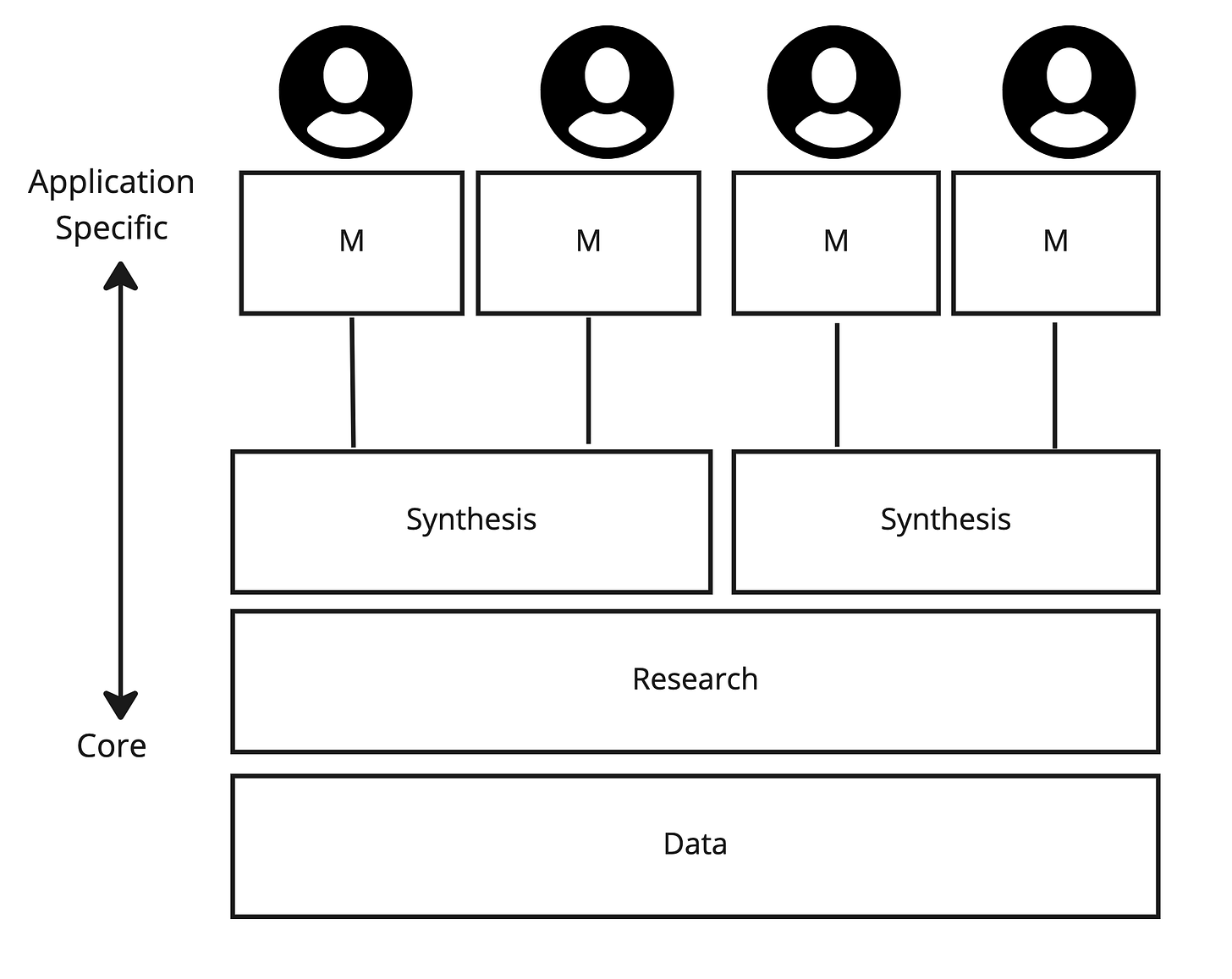

The second is what I would call the platform approach. Most models are the application of research and synthesis. You start to get something that looks like this:

Some parts of the "stack" stay very application-agnostic. Other parts differ between applications. The benefit here is that you centralize the research, which imparts some consistency and congruence, and "build applications" on top of that research. This approach addresses one of the biggest challenges of maintaining multiple models: consistency where it matters.

Both approaches look for a win/win instead of a tradeoff. Use product (and platform) thinking for the models you use in your company. Focus on the job-to-be-done of each model, and layer models effectively.

Your models work for you, not the other way around.

One thing I have reservations about here is the suggestion that the elusive quick, recoverable decision is better than one that follows a process.

I get that in this article it is in service of explaining other concepts. That assumption, however, is very flawed. Here's why:

- assuming that decisions are reversible or easily recoverable: this is far from showing agility and adaptability. It is used to justify decisions that lack an understanding or appreciation of the consequences for everyone involved. Such decisions are taken on the basis of "I know best", the trademark of the one-trick pony; "I know enough", which disregards the need to listen in a complex environment; "no need to consult", an attempt to operate in isolation; "we'll fix that later", which shows a lack of responsibility for the implications of the decision; and "it's easier that way", which is a bias for convenience.

- assuming that, even if decisions are reversible, a quick decision is better by default because it is action after all. These are the people who believe that any reflection in business is a sign of analysis paralysis, and that speed to market and competitiveness demand anxiety and prompt responses.

- one that this article is implicitly making: take the quick decision, because models fail us in usefulness and cause delays after all.

It doesn't mean that using gut instincts and heuristics is not helpful. They are massively important and liberating, reducing tension and managing stress. However, they are not to be used for every decision.

Suggestions that help us balance decisions:

- recognize complexity? Reflect first. Engage others. Communicate with clarity. Understand implications, and plan accordingly.

- in the universe of simple and complicated decisions, isolated and gut-driven decisions trump consideration and engagement because they are faster. Recognize, though, that the problems we're paid to solve are not trivial, and this is not a path to a solution.

- empathize. Put yourself in the shoes of the customer, the partner, or the team that needs to rework things in the system because of what you quickly decided was best. Consider what that does to the relationship. You'll see that your decisions are reversible to you, but they have implications for others that may not be reversible.

- learn to match the problem with the right model. Don't take shortcuts. Reflection early in your career will give you a clear sense of which tool to use later. It's a slow process.

- embrace failure, which requires not only reflection but also a willingness to do things differently. That will put you in a better position for future decisions.

I realize I just wrote an article lol. Gotta publish this somewhere.

I like this visualization of how we use models. Pointing to the fact that we may not be using the same models (or may be over-constraining to one model) is really great. I think this is why techniques like six hats work the way they do.

However, there is no way I will ever interpret ICP as anything other than Insane Clown Posse.