TBM 405: Hope, Context, and Control

This essay is about hope, fear, AI, and the tension between control and collective sensemaking.

I was reading an article about the state of SaaS recently, and came across this line:

The data moat surrounding incumbent SaaS / systems of record is likely overstated. I would argue that the most important context doesn’t reside in systems of records, regardless of their size, but in the minds and multimodal work of millions of users over time.

What struck me is how obvious this is to anyone (with a systems thinking, complexity-aware brain) in product development, and how counterintuitive it is to anyone outside of product development. I have long argued that tools barely scratch the surface when it comes to capturing real-world sensemaking, meaning-making, and decision-making. The same goes for the “official” frameworks used to manage companies. They do something, but it isn’t everything.

The tension is perfectly captured in James C. Scott’s distinction between legibility and mētis:

Legibility: the simplification of reality into standardized, abstract, and comparable representations so that institutions can see, measure, administer, and control it at scale.

Mētis: the locally grounded, experience-based, tacit knowledge that people use to navigate real situations, adapt to context, and make good decisions when the map no longer matches the terrain.

“System of record” and “source of truth” sit squarely in the legibility domain. Whole segments of SaaS, especially in the operations management domain, are basically legibility systems. Either through integrations or data entry, they collect information, remove any real local context, and produce simple, manageable reports.

There’s a lot of record, and only a little bit of truth, but that truth is very seductive.

This might be fine if the promise of your product is an accounting system. Accounting systems are meant to be transactional and to ensure everything adds up. Or an asset tracking and mapping system, where you can’t lose anything. Or a support ticket intake system. Or a construction project management system.

But if the promise is actual outcomes in the complex world of product development, things get shaky.

Rollup Systems

Inside Dotwork, we refer to these systems as “Rollup Systems.” The basic idea of these tools is: “How can leadership and management see everything in one place, nicely rolled up, everything adding up, into neat and tidy apples-to-apples abstractions, such that everyone gets the warm and fuzzy feeling they are managing a simple or complicated system, and not an emergent, complex, sociotechnical system.”

Whenever we (at Dotwork) engage with customers, we go straight to rituals, interactions, decisions, etc., to avoid getting caught up in the rollup fixation. We go hard anti-rollup whenever possible. It’s hard, though, because there’s a seduction (and necessity) for legibility.

Every company has legibility systems. The real question is whether the system helps or harms, and what it is optimized for (e.g., risk reduction or innovation). The CTO who demands everyone does 2-week sprints might be doing exactly the right thing for the risk-averse, conservative bank that values legibility over the fruits of mētis. Or they might be, at best, condemning their team to wasteful local workarounds, and, at worst, denying the bank the ability to innovate.

Not to go there, but the team that uses SAFe might be reading the context perfectly: they need to stabilize the patient, and having a rule for everything is the exact right thing to do (and only real option). Or they might be dooming their team to sub-par results because of those rules.

Today In Tech

If you’ve been in any tech company recently, you know that things are tough at the moment.

Leaders are being told to “get into the details”, which could be framed as the anti-rollup approach. It’s not enough to look at reports from on high; you have to go deep and be hyper-aware about everything that is going on.

And at the same time:

Escalation systems are broken due to fear of layoffs, fractured company cultures, and what is frequently described as “Game of Thrones”. When crap like this is happening, you get a push for legibility as a form of defense. The front line has to live a double life, checking all the boxes while at the same time doing whatever they can to produce results locally.

There is strong pressure on near-term efficiency, cost savings, and doing more with less. You have to prove it as well, which means you have a strong push for legibility at the expense of reality.

There is strong pressure to innovate with AI, which involves a) a strong push for local mētis and b) a sudden desire to track every AI initiative, cost saving, and outcome at the global level… so, once again, a push for legibility.

These tensions highlight the normal polarity between legibility and local context, but turn the knob up to 11.

And then let’s throw AI into the mix. A recent study challenged the notion that AI is valuable primarily because it allows people to be more productive individually. Some key findings:

People talked to each other more, not less. Employees with the AI tool gained significantly more collaboration ties (+7.77 degree centrality vs. +1.12 for control) and knowledge-sharing ties (+5.21 vs. +0.84). The AI made human interaction more worthwhile. Good.

Specialists became knowledge magnets. Technical experts saw the biggest jump in being sought out for knowledge (In-Degree up 5.92 vs. 1.96 for generalists). The AI made deep expertise more valuable, not less; it helped people find and access the right expert faster.

Generalists shipped more. Sales staff (the generalists) saw the biggest productivity gains, completing roughly 28% more projects. The AI handled enough coordination overhead that integrators could actually integrate.

The network itself changed shape. The before/after network visualizations are striking: treatment group nodes (red) go from scattered clusters to a dense, interconnected mesh. The org’s informal structure literally rewired in three months.
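If "degree" and "in-degree" are unfamiliar, here is a minimal sketch of what those numbers count. I am assuming the study reports raw tie counts (a change of +7.77 would not make sense for a normalized 0-to-1 score), and the names and ties below are hypothetical toy data, not the study's. Degree counts how many distinct people someone collaborates with; in-degree counts how many distinct people come to someone for knowledge.

```python
from collections import defaultdict

def degree(edges):
    """Count distinct collaboration partners per person (undirected ties)."""
    neighbors = defaultdict(set)
    for a, b in edges:
        neighbors[a].add(b)
        neighbors[b].add(a)
    return {person: len(others) for person, others in neighbors.items()}

def in_degree(asks):
    """Count distinct people who seek knowledge from each person (directed ties)."""
    seekers = defaultdict(set)
    for seeker, expert in asks:
        seekers[expert].add(seeker)
    return {expert: len(who) for expert, who in seekers.items()}

# Hypothetical toy data (not from the study).
collab_before = [("ana", "ben")]
collab_after = [("ana", "ben"), ("ana", "cy"), ("ana", "dee"), ("ben", "cy")]
# (seeker, expert): who goes to whom for knowledge.
asks_after = [("ben", "ana"), ("cy", "ana"), ("dee", "ana"), ("ana", "cy")]

before = degree(collab_before)
after = degree(collab_after)

# Change in collaboration ties per person; people absent before start at 0.
degree_change = {p: after[p] - before.get(p, 0) for p in after}
knowledge_in_degree = in_degree(asks_after)

print(degree_change)
print(knowledge_in_degree)
```

A figure like "+7.77 vs. +1.12" would then be the average of that per-person change across the treatment and control groups, assuming the study averaged it that way. Note what this kind of measure is: a legible summary of who talks to whom. It says nothing about what was said.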

The Tension

Notice the tension?

So what we’re seeing here is fascinating.

Optimism (“Humanism”?)

One can view this optimistically as a catalyst for collaboration, context sharing, and creativity, expanding the “mētis” boundary to larger groups. The systems of record we relied on were terrible at this, but that is because: 1) you will always be terrible at it if you are collecting records and fields and boiling everything down into transactions, and 2) there are natural limits to how far context can be shared without drowning a team in cognitive load.

Another optimistic angle is that leaders knew the information they were receiving was bad and craved more context. This is backed up by all the research I have done, which shows that leaders describe “something missing” in the reports they receive, even when those reports are comprehensive. The missing link was always depth and context. They sensed the gap.

So an optimistic interpretation is that, with care, we can engage more people in a shared context. Another optimistic interpretation is that AI supercharges the many interesting ways “technology” can help shape behavior for the better.

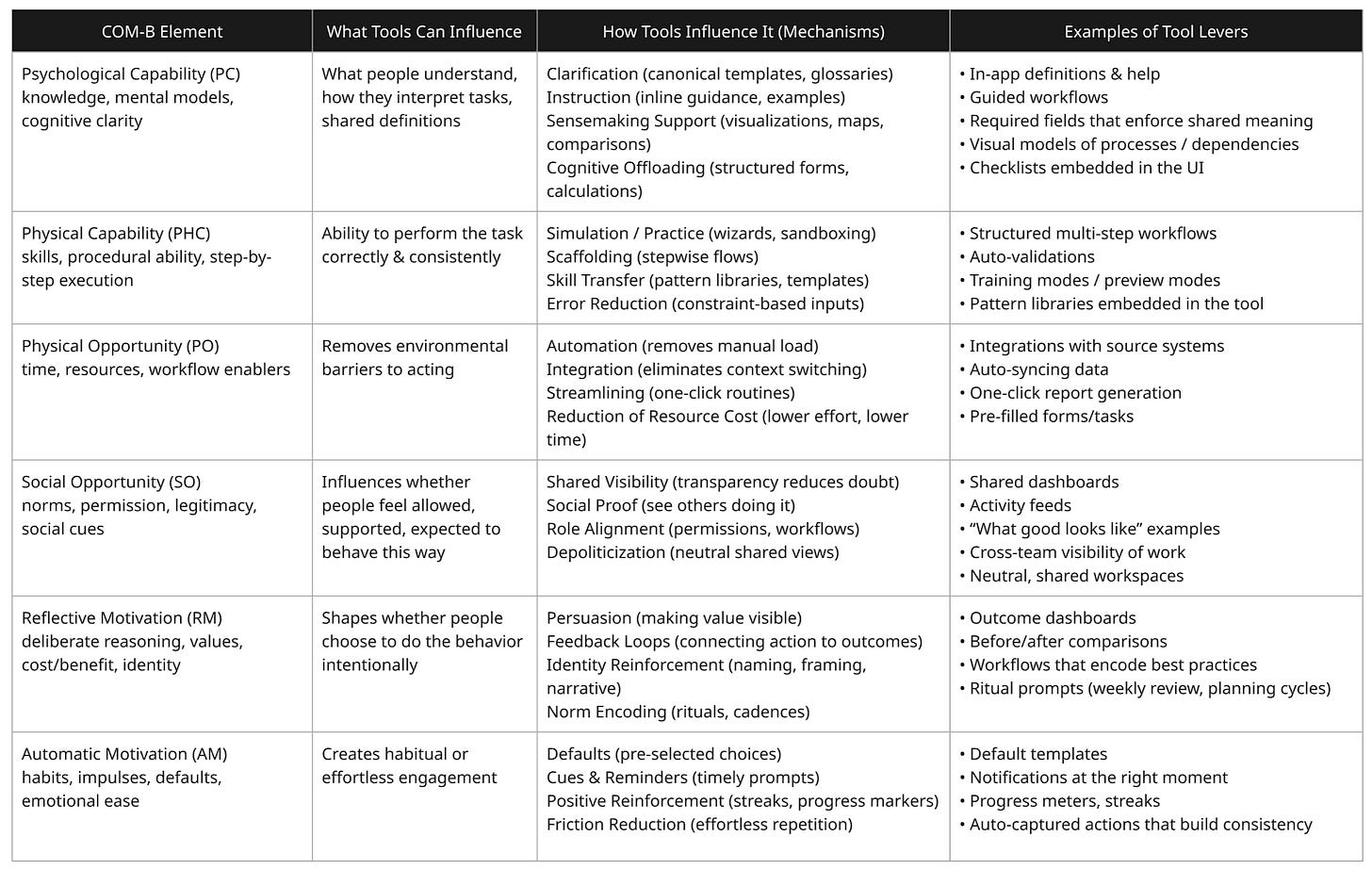

Consider all the levers we have right now where AI could help in one way or another:

Pessimism (“Realism”?)

If you winced at the last table, imagining all the bad stuff that could happen…

Or pessimistically: if you could just harness the hive mind of the people making creative decisions in your company, you could ultimately replace those people. That AI is, in fact, a catalyst for more legibility and top-down management, because “context is NOT the moat when it becomes ubiquitous.”

The pessimism here is that AI, and many of the people promoting AI, are primarily interested in control, not creativity or collective thriving. And now the very thing that local teams had that was valuable, their hive mind, is integrated into the “brain” of the company management system.

Stepping back to 20,000 ft, away from SaaS for a second:

Curtis Yarvin pushes ideas like handing national leadership to a CEO-monarch and creating corporate-style “freedom cities” without democratic governments.

Dark Enlightenment proponents see algorithmic elites (often aided by AI) as the only realistic stewards of order, dismissing grassroots dissent.

Long-termists advocate rigorous, quantitative decision‑making (e.g., “value-alignment” efforts) – an approach critics say risks becoming technocratic and inflexible.

Accelerationists often support central planning by AI-powered platforms and minimal regulation, trusting “progress” to purify society.

Across sectors and strands of thought, techno-authoritarian thinkers elevate centralized, algorithmic control above humanistic complexity. These aren’t fringe movements either. People who bought into these areas (with their wallets and political donations) are on product podcasts talking about how great everything is.

It is “mainstream” in tech. Let’s not pretend it is fake.

There’s much more going on than the “Yay, Best Of Times!” posts on LinkedIn. It is, and isn’t. And everything in between, and for different people.

My Hope

I desperately hope the optimistic future awaits. That, collectively, we become better sense-makers, meaning-makers, and deciders. That the natural physics of information entropy gets bent, and that we can share context across broader spheres and do more amazing work together.

There will always be some need for legibility, but maybe we’re on the cusp of bending the curve. Or not.

Just know you’re not alone if you feel this duality. It’s okay to be optimistic and pessimistic at the same time. To see real possibilities and still understand the depth of what’s at stake. To feel hopeful, but wince when people sugar-coat the situation or mistake clean stories for reality.

To quote Alex Komoroske:

We have a choice. We can go down the path that we’ve been going down which is engagement maximizing hyperaggregation… or being aligned with our intentions and that could lead to a new era of human flourishing.

Wow - I’ve read probably 100,000 words on AI this week, and this was the most parsimonious. A similar way to frame the duality is a choice between reductionism and holism. Reductionism has been a driving force in our society for a long time. All of the major institutions that make up society have reductionist designs.

Then there is human reality. What we experience. Our individual imperfections which are magnified in social systems.

What’s wonderful is that AI actually presents the opportunity to redesign basically everything in a way that’s actually more congruent with human experience than the current systems.

That said, we are currently seeing how the technology works when it’s jolted into legacy systems.

Perhaps that’s what we should think more about - what do the new systems realistically look like?

Because it’s clear that AI is going to break legacy systems very soon.
