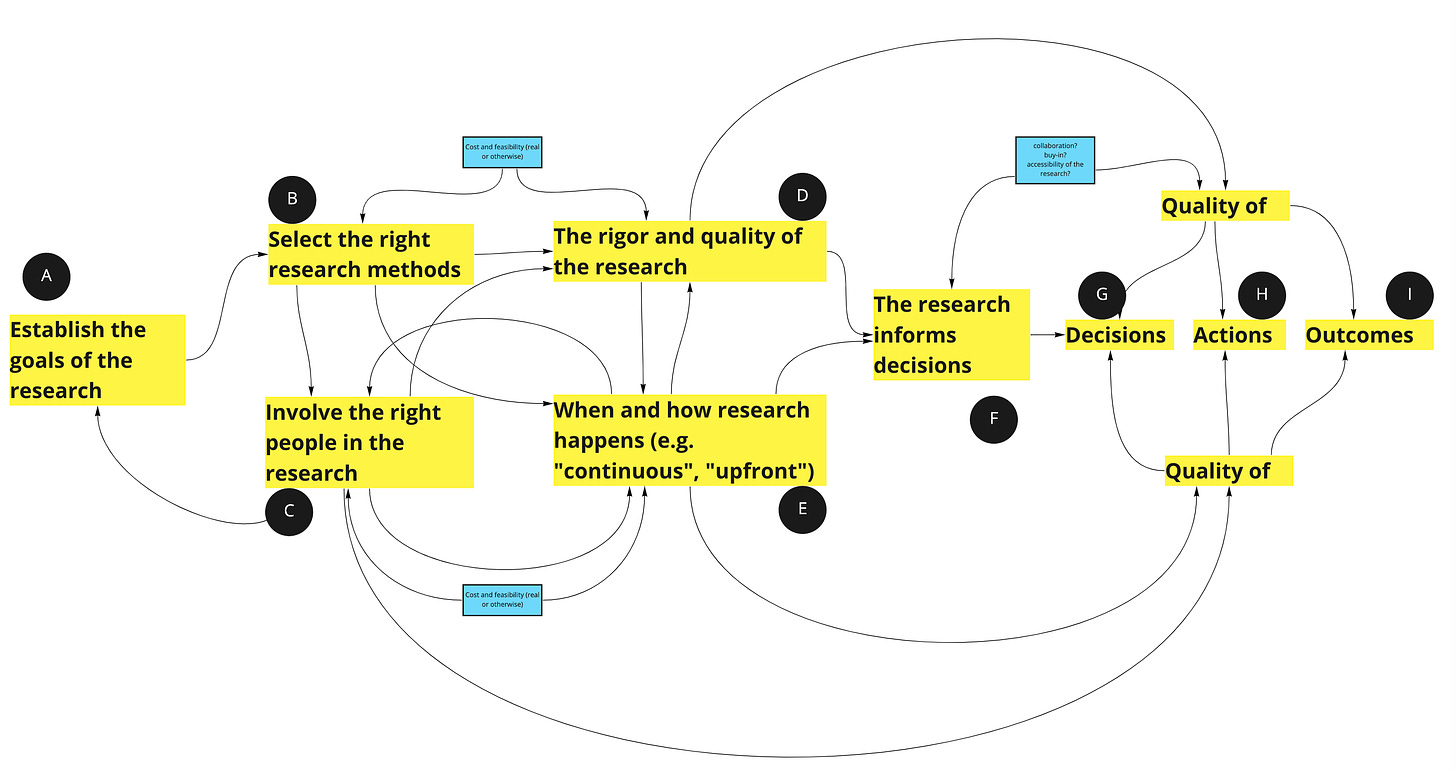

Here’s a high-level model describing how research turns into outcomes.

A: We establish the research goal(s)

B: Given those goals, we select appropriate methods

C: Then, we decide who should be involved

D: The research happens with a certain level of quality and rigor

E: We also decide when and how it will happen

F: The output of the research informs decisions

G: Those decisions have a quality range

H: The resulting actions have a quality range

I: And the resulting outcomes have a quality range

What I find helpful about this model is that it can help teams have more productive conversations about areas for improvement. In my experience, conversations about research can get dogmatic VERY quickly. It becomes about the way, and not the why.

You start to see that surface-level debates about upfront vs. more continuous research, qual vs. quant, and more/less democratized approaches to research aren’t capturing all the pieces of the puzzle. “Successful research” is multi-faceted, and many of the factors are outside any single person’s control.

Using this model as a guide, here are some examples of where things go wrong:

We start with the wrong goal for the research. Nothing we do afterwards will matter (except pivoting the research).

We start with the right goal, but we pick the wrong methods. For example, we use a qualitative survey to do exploratory research instead of contextual inquiry. The quality and rigor of the research suffers, which impacts decisions, actions, and outcomes.

We do amazing research (using the right methods), but for whatever reason it never ends up impacting decisions. Perhaps the product strategy shifts, rendering the research useless. Or teams are forced to prematurely converge on a way forward so they can “commit”.

The UXR team does a large, upfront research project due to a shortage of team members. Unfortunately, the research happens in a vacuum. No product managers, designers, or developers take part. This impacts downstream decision quality.

The team adopts continuous discovery methods. The methods are effective at involving people in research, but the research itself ends up less rigorous and lower in quality.

All the “right” things happen—method selection, rigor, inclusion, and good decisions. But the team can't act because they are struggling under a mountain of tech debt.

Where else? Where is the current bottleneck at your company? Does it move around? Why? Who isn’t listening to who? Who doesn’t believe who? How are past experiences on past teams biasing people in the present?

I was chatting with a product leader recently who was adamant that “teams should do their own research!” It turned out that they had never worked alongside a skilled researcher. Their perception was skewed. They were biased toward quantitative data, partly because they were not skilled in working with qualitative data. Yet they did correctly identify an important failure mode: the risk of team members becoming order takers who don’t actively seek out interaction with customers.

On the flip side, in my conversations with skilled researchers (UXRs, etc.) I often hear the other side of the coin. The problem, in their eyes, is a lack of rigor. They notice the “obvious” mismatched methods, and the poor execution of the research.

“Continuous” research feels rushed, and rife with confirmation bias. Every professional bone in their body is yelling “this isn’t how we should do it!” “Why should we be conforming the pace of research to development cadences?” Unless they have enough team members to truly embed on teams and “empower citizen researchers”, they are more apt to be protective of the process.

Both examples speak to the difficulty of having productive conversations around research. I’ve heard product managers flatly discount any and all qualitative research chops (“anyone can learn how to interview”). And I’ve heard UXRs flatly discount the ability of anyone else to do research (“well, I guess it might be good to interact with customers but they aren’t actually DOING RESEARCH”). They can also refuse to explore the value of lightweight and more inclusive approaches.

It gets defensive…fast.

No breakthrough insights on my end, but maybe talking through the above model can help at least put names/words to the pieces of the puzzle, and figure out where people are seeing things differently.

To start, where is your team currently experiencing challenges? Talk it over…

John, firstly thanks for all your content, I can't tell you how valuable it is to me on my product lead journey. Ironically have set a day off today to think about ways to get our process back on track and stumbled across your latest post.

A question if I may (not sure if you can reply here): does the learning list have a place on your roadmap template? If so, where? If not, is it just an artifact / doc with the rest of the bet info?

Thanks a million

Thanks for this insightful summary of the current discourse 👍🏽